32-bit Operating Systems (OS) will stop working after 19 January 2038....

Do you know when the 32-bit OS will stop working?

Or: when will 32-bit computers stop storing time correctly?

Before moving forward, it's always good to know what this post covers:

- When exactly will a 32-bit Unix-based OS stop working?

- Why will it happen at that exact date and time?

- What are bits, and what is the maximum value of the standard bit sizes?

- 32 bits can obviously store very big values, so what type of data or value could cross the 32-bit maximum?

- Why does the OS have to keep track of time? Is time especially significant?

- Is there a particular date from which the OS counts time?

- Why is 1970 the origin of Unix time, and where does 1901 come from?

- In what unit is time stored in a 32-bit Unix-based OS?

- How many seconds must be stored from 1970 until 2038?

- Which devices will be impacted by this?

Note: OS refers to a 32-bit Linux or other Unix-based OS that stores time as a signed 32-bit number (the C type `time_t`).

When exactly will a 32-bit Unix-based OS stop working?

19 January 2038, at 03:14:07 UTC

Why will it happen at that particular date and time (and even at a particular second)?

Because at that moment, the 32-bit counter that stores the time will be exactly at its maximum value: 2,147,483,647 seconds after 1 January 1970, 00:00:00 UTC. One second later, the count would cross that maximum, so the counter can't store any further value of time correctly.
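A minimal Python sketch of that moment follows. It assumes the usual convention that Unix time is a signed 32-bit count of seconds since 1970-01-01 00:00:00 UTC. The helper names `to_utc` and `wrap_int32` are hypothetical and exist only for this illustration. Dates are computed by adding a `timedelta` to the epoch instead of calling `datetime.fromtimestamp`, because some platforms reject negative timestamps.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch: second 0 of Unix time.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Largest value a signed 32-bit integer can hold: 2^31 - 1.
INT32_MAX = 2**31 - 1


def to_utc(seconds: int) -> datetime:
    """Read a count of seconds since the epoch as a UTC date and time."""
    return EPOCH + timedelta(seconds=seconds)


def wrap_int32(value: int) -> int:
    """Simulate storing `value` in a signed 32-bit integer (two's complement wrap-around)."""
    return (value + 2**31) % 2**32 - 2**31


last_good = to_utc(INT32_MAX)                       # the last second that fits
after_overflow = to_utc(wrap_int32(INT32_MAX + 1))  # one second later, after wrapping

print(INT32_MAX)       # 2147483647
print(last_good)       # 2038-01-19 03:14:07+00:00
print(after_overflow)  # 1901-12-13 20:45:52+00:00
```

The wrap-around function mimics what 32-bit hardware does when the counter overflows. Python's own integers never overflow, which is why the wrap-around has to be simulated explicitly.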

Extra knowledge

Techie detail:

When the clock reaches Tue, Jan 19 03:14:07 2038, the next second (03:14:08) will be read as Fri, Dec 13 20:45:52 1901. Can you see the jump in day and date between the 7th and the 8th second? At the 7th second it's Tuesday, Jan 19 03:14:07 2038, and just one second later, at the 8th second, it's Friday, Dec 13 20:45:52 1901. This happens because the counter is signed: after its largest positive value (2,147,483,647) it wraps around to its most negative value (-2,147,483,648), which means 2,147,483,648 seconds before 1970. Internally, however, if the code calculates the difference between Tue, Jan 19 03:14:07 2038 and Fri, Dec 13 20:45:52 1901 using the same 32-bit arithmetic, the result is still just 1 second, because that is how the numbers work internally. That is what the roll-over is about.

What are bits and their maximum values?

Before moving forward, let me introduce some basic terminology:

Bit: a single block ([]), or one container, in which either 1 or 0 is stored.

Similarly,

2 bits means 2 blocks ([], []), or simply 2 containers, each of which can store either `0` or `1`.

Similarly, there are some standard bit sizes in the OS world.

Standard bits:

Let's look at the standard bits, such as 4-bit, 8-bit, 16-bit, 32-bit, 64-bit and 128-bit.

4 bit:

4 containers ( [], [], [], [] )

8 bit:

8 containers ( [], [], [], [], [], [], [], [] )

16 bit:

16 containers ( [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [] )

32 bit:

32 containers ( [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [] )

64 bit:

64 containers ( [], [], [], [], [], [], [], [], [], [], [], [], [], [], [], [] . . . . . . . [] )

Each container or block allows storing either 1 or 0.

Extra Knowledge:

But why does the OS store data in binary form?

It's because the hardware only understands two states: `True` or `False`, `1` or `0`, a switch that is `ON` or `OFF`.

So the hardware of a laptop or computer works with data in `binary` format (0 or 1) only. It doesn't understand the human-readable `decimal` digits (0 to 9) directly. Text, including digits typed as characters, is first encoded as character codes such as ASCII and then stored in binary. Numbers used in calculations, such as the stored time, are converted directly into binary.
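The difference between a number stored as text and a number stored as binary can be seen with Python built-ins. `format(n, "b")` and `int(text, 2)` convert between decimal and binary. `str.encode("ascii")` shows the character code of each digit when the number is written as text. `struct.pack("<i", n)` stores the same value directly as a 4-byte (32-bit) signed integer. The sample value 1,000,000 is arbitrary.

```python
import struct

n = 1_000_000  # an arbitrary example value

# Decimal -> binary digits, and back again.
as_binary = format(n, "b")
back_to_decimal = int(as_binary, 2)

# Stored as text: one ASCII code (one byte) per digit character.
as_text = str(n).encode("ascii")

# Stored as a number: the value itself in a signed 32-bit integer (4 bytes, little-endian).
as_int32 = struct.pack("<i", n)

print(as_binary)        # 11110100001001000000
print(back_to_decimal)  # 1000000
print(list(as_text))    # [49, 48, 48, 48, 48, 48, 48]
print(len(as_int32))    # 4
```

Written as text, the number needs seven bytes, one for each digit. Stored as a binary integer, it fits in four.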

Let's get back to our topic and talk about the `maximum` value that can be stored in standard bits.

The maximum value of standard bits?

What are the maximum values that 4-bit, 16-bit, and 32-bit can store?

Condition for the maximum value in any number of bits: `when all containers or blocks are filled with 1`

For example:

In `4 bit`, the `maximum` value that can be stored is: `15`

[1][1][1][1] = 1 x 2^3 + 1 x 2^2 + 1 x 2^1 + 1 x 2^0 = 8 + 4 + 2 + 1 = 15

Similarly in `16 bit`:

[1][1][1][1][1][1][1][1][1][1][1][1][1][1][1][1] = 1 x 2^15 + 1 x 2^14 + 1 x 2^13 + 1 x 2^12 + 1 x 2^11 + 1 x 2^10 + 1 x 2^9 + 1 x 2^8 + 1 x 2^7 + . . . . . + 1 x 2^3 + 1 x 2^2 + 1 x 2^1 + 1 x 2^0 = `65535`

Similarly, in `32 bit`:

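[1][1][1][1][1][1][1][1] . . . . . . . [1][1][1][1][1][1][1][1] = 1 x 2^31 + 1 x 2^30 + 1 x 2^29 + . . . . . . . + 1 x 2^3 + 1 x 2^2 + 1 x 2^1 + 1 x 2^0 = 4,294,967,295 = approximately `429 crores`.

However, Unix time is stored as a signed 32-bit number, so that it can also hold negative values (times before 1970). In the usual representation (two's complement), the leftmost container acts as the sign, which leaves 31 containers for the value. So the largest time value is [0][1][1] . . . . . . . [1][1] = 2^31 - 1 = 2,147,483,647 = approximately `214 crores`.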
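The rule "all containers filled with 1" can be checked in a few lines of Python. Summing 1 x 2^k over every position gives the maximum unsigned value, which always equals 2^n - 1. The function name `max_unsigned` is an illustrative choice. The last line applies the same rule to 31 containers, which gives the signed 32-bit maximum that matters for Unix time.

```python
def max_unsigned(bits: int) -> int:
    """Largest value when every one of `bits` containers holds a 1."""
    # Positional value of each container: 2^0, 2^1, ..., 2^(bits-1).
    return sum(1 * 2**position for position in range(bits))


for bits in (4, 8, 16, 32, 64):
    value = max_unsigned(bits)
    assert value == 2**bits - 1  # same result as the closed form 2^n - 1
    print(f"{bits:>2}-bit: {value:,}")

# Signed 32-bit: one container acts as the sign, leaving 31 for the value.
print(f"signed 32-bit: {max_unsigned(31):,}")

# Prints:
#  4-bit: 15
#  8-bit: 255
# 16-bit: 65,535
# 32-bit: 4,294,967,295
# 64-bit: 18,446,744,073,709,551,615
# signed 32-bit: 2,147,483,647
```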

So, finally, we know the maximum values that 4-bit, 16-bit, and 32-bit can store. Now you tell me: when will 32 bits stop working? The answer: as soon as the value they have to store grows beyond that maximum.

But what type of data could ever grow that large? Obviously, the 32-bit maximum is a very big number.

The data is time, which is stored as the number of seconds elapsed since 1 January 1970, 00:00:00 UTC. And time always moves forward, so this value keeps increasing.
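To see what the stored value looks like today, a Python sketch can read the current Unix time with `time.time()` and compare it with the signed 32-bit limit. `time.gmtime(0)` confirms that second 0 is the start of 1970 in UTC. The variable names are illustrative. The result changes every second, so only the epoch line has a fixed output.

```python
import time

INT32_MAX = 2**31 - 1  # largest signed 32-bit value

epoch = time.gmtime(0)          # what Unix time 0 means
now = int(time.time())          # seconds since 1970-01-01 00:00:00 UTC, right now
seconds_left = INT32_MAX - now  # room left before a signed 32-bit counter overflows

print(time.strftime("%Y-%m-%d %H:%M:%S", epoch))  # 1970-01-01 00:00:00
print(f"Current Unix time: {now:,} seconds")
print(f"Seconds left before overflow: {seconds_left:,} (about {seconds_left // 86_400:,} days)")
```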

Why does the OS have to keep track of time? Is time especially significant?

For now, accept that time plays a very important role in operating systems: file timestamps, scheduled tasks, logs and security certificates all depend on it.

Is there a particular date from which the OS counts time?

Yes: 1 January 1970 at 00:00:00 UTC, known as the Unix epoch.

Why 1970, and where does 1901 come from?

Unix-based OS was born around 1970, so that date was chosen as the reference time. Just as we count a person's age from the day they were born, Unix-based systems count time from this reference point. The year 1901 is not the origin of computers or of Unix time. Because the 32-bit counter is signed, negative values count backwards from 1970, and the most negative value, -2,147,483,648 seconds, falls on 13 December 1901 at 20:45:52 UTC. So a signed 32-bit clock can only represent dates from December 1901 to January 2038.

And in what unit is the time stored?

It's a small unit of time called a second.

How many seconds must be stored from 1970 until 2038?

So a 32-bit Unix-based OS stores time in seconds since 1970. For example, once the first day after 1 January 1970 has passed, the OS has to remember 24 hrs x 60 min x 60 sec = 86,400 seconds. This number can't even be stored in a 16-bit register, as it exceeds the 16-bit maximum of 65,535. Now suppose a whole year has passed since 1970, so we are in 1971. The total number of seconds the OS has to store is 365 days x 24 hrs x 60 min x 60 sec = 31,536,000 (~3 crores). Look at that number: can you digest how big 31,536,000 is?

Now let's calculate the total number of seconds for 10 years: 10 x 31,536,000 = 315,360,000, approximately 31 crores.
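The same arithmetic in Python uses 365-day years and ignores leap days, as the text does. The last step is an addition for illustration. It divides the signed 32-bit maximum by the length of a year to show that the counter fills up after roughly 68 years, and 1970 + 68 lands in 2038.

```python
SECONDS_PER_DAY = 24 * 60 * 60            # 86,400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # 31,536,000 (leap days ignored)
UINT16_MAX = 2**16 - 1                    # 65,535
INT32_MAX = 2**31 - 1                     # 2,147,483,647

print(f"{SECONDS_PER_DAY:,}")         # 86,400
print(SECONDS_PER_DAY > UINT16_MAX)   # True: one day already overflows 16 bits
print(f"{SECONDS_PER_YEAR:,}")        # 31,536,000
print(f"{10 * SECONDS_PER_YEAR:,}")   # 315,360,000

years_to_fill = INT32_MAX / SECONDS_PER_YEAR
print(round(years_to_fill, 1))        # 68.1
```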

The maximum value of a signed 32-bit number, which is what stores the time, is 2,147,483,647, approximately 214 crores. Can you feel how quickly, within a few decades, the count will go past it?

That limit will be crossed on 19 January 2038.

Let's feel it and prove it.

From 1 January 1970 to 1 January 2038 is 68 years. On top of that come 18 more days, plus 3 hours, 14 minutes and 7 seconds, up to 19 January 2038 at 03:14:07 UTC. Don't get lost in the exact value; the approximate value in crores is enough to see the point.

1) Total passed years = 68 (1970 to 2037), 17 of them leap years with one extra day. ---> Total seconds = 68 × 31,536,000 + 17 × 86,400 = 2,145,916,800 ~ 214.6 crores

2) Then, in 2038, the 18 full days before 19 Jan = 18 × 24 × 60 × 60 = 1,555,200 seconds ~ 15.5 lakhs, plus 3 hours, 14 minutes and 7 seconds on 19 Jan = 11,647 seconds.

Total seconds from 1 Jan 1970 to 19 Jan 2038, 03:14:07 UTC = 2,145,916,800 + 1,555,200 + 11,647 = 2,147,483,647 (~214.7 crores) = the maximum value of a signed 32-bit number = all 31 value containers filled with 1. To store larger values, more containers or boxes are needed. That's why 64-bit time values are used instead of 32-bit ones.

So as soon as the OS needs to store a time greater than this, it has to switch from a 32-bit to a 64-bit time value (a 64-bit `time_t`). A signed 64-bit count of seconds will not overflow for roughly 292 billion years.

That's the exact reason why a 32-bit Unix-based OS will stop working correctly after 19 Jan 2038 at 03:14:07 UTC.
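The hand calculation can be double-checked in Python. `datetime` counts the seconds between the epoch and 19 January 2038, 03:14:07 UTC directly. Like Unix time itself, it does not count leap seconds. `calendar.isleap` is used to count the leap years from 1970 to 2037. The variable names are illustrative.

```python
import calendar
from datetime import datetime, timezone

epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
limit = datetime(2038, 1, 19, 3, 14, 7, tzinfo=timezone.utc)

# Direct count of seconds between the two moments.
total = int((limit - epoch).total_seconds())

# The step-by-step version from the text.
leap_years = sum(calendar.isleap(year) for year in range(1970, 2038))  # 17
years_part = 68 * 365 * 86_400 + leap_years * 86_400                   # 1970 to 2037
days_part = 18 * 86_400                                                # 1 to 18 January 2038
time_part = 3 * 3600 + 14 * 60 + 7                                     # 03:14:07 on 19 January
step_by_step = years_part + days_part + time_part

print(total)                  # 2147483647
print(step_by_step == total)  # True
print(total == 2**31 - 1)     # True
```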

Which devices will be impacted by this?

- As far as operating systems are concerned, Windows is mostly fine, because it has used a different method for storing dates from the start.

- 32-bit versions of the Linux operating system still have some issues, but they are being fixed. Systems should be fine by 2038 as long as they can be updated to a 64-bit operating system or to software that uses 64-bit time values.

- Android is based on Linux, so a 32-bit Android device might not be able to handle dates beyond 2038 unless its operating system is updated. This is expected to be fixed well before 2038.
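On a given machine, one rough way to check whether the platform's C time functions can handle dates past the limit is to ask Python's `time.gmtime` for the second just after it. `time.gmtime` relies on the platform's C library. On a platform with a 32-bit `time_t`, it is expected to raise an error instead of returning a date. The function name `handles_dates_after_2038` is hypothetical. This is a heuristic sketch, not a complete audit: programs, file formats and databases on the same device may still store time in 32 bits.

```python
import time


def handles_dates_after_2038() -> bool:
    """Heuristic: can this platform's C time functions represent 2^31 seconds?"""
    try:
        moment = time.gmtime(2**31)  # one second after 2038-01-19 03:14:07 UTC
    except (OverflowError, OSError, ValueError):
        return False  # likely a 32-bit time_t
    return moment.tm_year == 2038


print("Handles dates after 2038:", handles_dates_after_2038())
```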

That's all for this blog. I hope you learned something interesting today. That's all from my side: keep learning and keep upgrading yourself.

Last but not least, if you find any errors in this blog, please let me know in the comments. That would be really appreciated ☺️

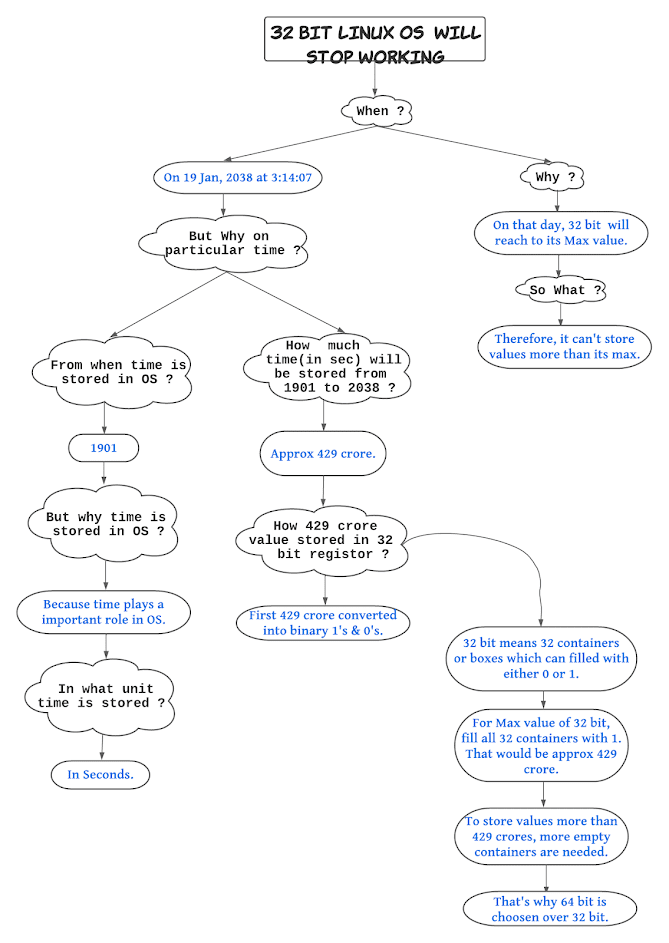

Keep this flow chart for future reference.


